Reinforcement Learning

Finding optimal path in unknown mazes

(by A* and Dynamic Programming)

Navigating in unknown real world is a key challenge in autonomous vehicle or mobile robot application. The problem is simplified as a robot navigating in an unknown maze and finding its optimal path. A motion agent for the robot has been developed and validated in the simulation. The approach is based on A* like algorithm with heuristic function and the number times of path traveled to learn the maze in the training run; and Dynamic programming method to find the fastest path in the testing run. By comparing with benchmark metrics, the test results proved that the robot was able to complete the mazes and find the fastest path within a very reasonable time.

Finding optimal path in unknown mazes (by Q-Learning)

The Micromouse problem is solved with Q-Learning method. The Q-Learning method would require much more training time to learn the maze than the A* + DP approach. However, programming is simpler, and it is able to find the optimal path with sufficient training.

Cart-Pole from OpenAI Gym (by Simple Random Gains)

To learn more about Reinforcement Learning for controlling a dynamic system, the inverted pole on the cart problem from OpenAI Gym is tried out.

Main alogrithm comes from:

http://kvfrans.com/simple-algoritms-for-solving-cartpole/

It is a linear state feedback control with discrete output, 0 or 1.

Feedback gains are selected randomly, but it works surprisingly well.

It is to be used as a benchmark for future Reforcement Learning developemnt.

Deep Learning Classifier

Hand Writing Recognition (Kaggle Digit Recognizer Competition - MNIST)

Applied convolutional neural network (Keras + TensorFlow + TensorBoard) to MINST dataset. The CNN is based on a VGG like architecture for the images with 28x28x1 and achieved 99.37% accuracy with test data set, ranking at the top 9% at Kaggle Digit Recognizer competition. I believe that 99.37% accuracy would better than the level any human can do.

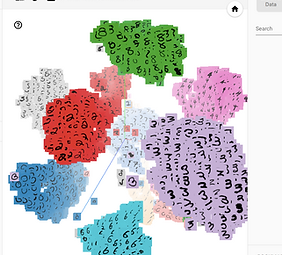

The Tensorboard-enabled embedding visualization is used to confirm the proper clustering of similar images (unlabeled data set).

- link to GitHub

State Farm Distracted Drivers Detection (Classification)

Built a deep learning model to detect distracted drivers from images. The data set from State Farm Kaggle competition is used for the development. The model is based on pretrained VGG model with ImageNet data and then re-trained with State Farm dataset. ~93% accuracy is achieved for 10 categories classification:

c0: normal driving

c1: text - right

c2: talking on the phone - right

c3: text - left

c4: talking on the phone - left

c5: operating the radio

c6: drinking

c7: reaching behind

c8: hair and makeup

c9: talking to passenger

Image Classification and Analysis thru Deep Learning API

This is a simple Python Flask web app to demonstrate image classification and analysis using Deep Learning. After uploading images from the local directories of your computer, the web app dectects and analyzes objects from the image, and print out key features to provide the context of the images. For example, 'sky', 'freedom', 'fun', 'people', 'flying', 'adults', 'outdoors', 'motion', ........

Object Detection

Image Detection (Classification+Regression) for Pictures and Videos

Applied the state-of-the-art deep learning frameworks to detect multiple objects in the images in near real time without sacrifying accuracy. Currently achieved mAP 74% at 59 FPS with VOC 2007 dataset (compared to Faster RCNN, mAP 73% at 7 FPS; YOLO mAP 63% at 45 FPS) - Tensflow, KERAS, VGG

Transfer Learning of Image Detection

Pre-trained with imagenet and then re-train top layer only with new dataset (custom). The code demonstrates detecting and classifying fish from the image data set: https://www.kaggle.com/c/the-nature-conservancy-fisheries-monitoring/data.

Building Detection with TensorBox - Spacenet Dataset

TensorBox developed by R. Stewart is applied to detect buildings on SpaceNet dataset. It is an the end-to-end objects detection algorithm that directly predicts bounding boxes and object class confidences through all locations and scales of an image. Specifically, GoogLeNet-LSTM-Rezoom is used for the final model.

The algorithm is implemented with AWS, GPU enabled EC. It took about 7 hours for 150K iterations.

- link to GitHub

CV for Automotives

Lane and Objects Detection with OpenCV and MobileNet-SSD

Applied OpenCV to detect the lanes based on the multiple channels and deployed a lighter version of deep learning algorithm, mobilenet-SSD to detect objects from mobile devices. It was developed under Tensorflow framework.

3D Pose Detection and Tracking from a Mono Camera

Developed 3D pose objects detection and tracking with a mono camera. It is based on DNN, Kaman filter, and Hungarian algorithm, and able to estimate the 3D position and orientation of multiple objects and track them thru a sequence of images.

Inventory Monitoring Drone

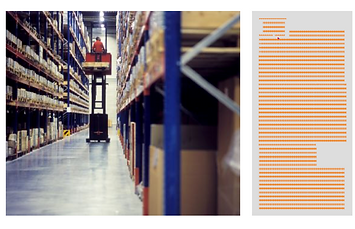

Warehouse Inventory Monitoring Drone - Indoor Vision Based Autonomous Flight

Flying drones autonomously at the warehouses where gps signal is denied. Used two off-the-shelf stereo cameras for drone localization and navigation. To achieve +/- 10 cm accuracy, Apriltags are installed at the site mitigating occasional drifts.

The drone has two on board computers: Intel i7 Nuc for primary communication and rack analysis - OCR and bar coding reading; NVIDIA TX2 for navigation and AI work.

Box Detection, Depth Calculation and 3D Reconstruction with Stereo Camera

TensorFlow + ROS running on NVIDIA TX2 to detect the boxes on the rack - SSD + MobileNet; Depth estimation and occupancy grid were performed thru point clouds generated by a stereo camera - Octomap.

Stereo Cameras

Monitoring Social Distancing with Deep Learning + Stereo Camera

Social distancing violation is detected with deep learning algorithm and a stereo camera. The stereo camera generates point cloud to provide bird's eye view of the detected objects. Therefore, it provides with more reliable and accurate warnings when 6 ft distancing is violated.

3D Pointcloud and SLAM

Visual SLAM with Intel RealSense D435 Depth Cameras

Performed SLAM (Simultaneous Localization and Mapping ) to estimate the location of products in a store and map its environment with Intel D435 and NVIDIA AGX Nano on a cart. It initialized with an Apriltag at the starting point , ran "rtabmap_ros" for SLAM algorithm and used "YoloV5" for product detection and out-of-stock identification.

3D PointCloud Construction with Multiple Stereo Cameras

Constructed 3D pointcloud of an object using four Intel Realsense cameras. Overcame technical challenges such as multiple cameras calibration and synchronization with OpenCV and Open3D .

Multiple Camera CV

Multiple Cameras and Multiple People Tracking

Tracking multiple people with multiple camera to overcome occlusion and re-entry. Applied object detection, key point tracking, re-ID to consolidate and maintain unique tracking ID per person. It ran on NVIDIA AGX Xavier with 4 GMSL cameras and NVIDIA Deepstream, and achieved ~10 frames/sec performance.

Product Recognition with Multiple Cameras

Recognized products on the checkout counter by aggregating 4 camera views and applying deep learning CV. It was based on YoloV7 segment, SORT, and a fine-grain classifier on NVIDIA AGX Xavier.

Vision Language Model with Live Video Streaming

Ran VILA1.5-3B model on Jetson AGX Orin 64GB using NVIDIA Agent Studio framework. Demonstrated the feasibility of running a small VLM one the edge with a reasonable frame rate ~ 8 frames/sec.

Fine-tuning a small VLM model may be required to a domain specific application to achieve the desired accuracy/performance of the model.

Supervised Learning

Movie Review Classification

Demonstrated movie review classification predicting positive or negative from your written review comments.

Link to Web App (temporally disconnected)

Predictiong Boston Housing Prices

Boston Housing dataset contains aggregated data on various features for houses in Greater Boston communities, including the median value of home for each of those areas. An optimal model based on a statistical analysis is developed first. Then the model is used to estimate the best selling price for a home. DecisionTreeRegressor and GridSearchCV from sklearn are used.

Some portion of code is imported from Udacity MLND assignment 2.

Predicting Student Intervention

Analyzed the data set on student's performance and develop a model that will predict the likehoold that a given student will pass, quantifying whether an intervention is necessary. Three classification methods are initially applied and compared: AdaBoost, Naive Bayesian and support vector machine. The AdaBoost model was picked and further fine-tuned with GridSearchCV().

Some portion of code is imported from Udacity MLND assignment 1.

Unsupervised Learning

Customer Segments

Used unsupervised learning techniques to see if any similarities exist between customers, and how to best segment customers in distinct categories. One goal of this project is to best describe the variation in the different types of customers that a wholesale distributor interacts with. Doing so would equip the distributor with insight into how to best structure their delivery service to meet the needs of each customer.

PCA and KMeans from sklearn used for data dimensioni reduction and clustering.

Some portion of code is imported from Udacity MLND assignment 3.

Mobile OCR (Optical Character Recognition) with OpenCV

Convert Currency

ConvertCurrency is an iOS app to make currency conversion simple, easy ans accurate. It helps your trip to abroad to be more enjoyable. It uses optimized Optical Character Recognition (OCR) technology to read instaneously one currency and converts to the other currency without any input. It utlizes the most recent exchange rate available from internet. It is based on swift and openCV.

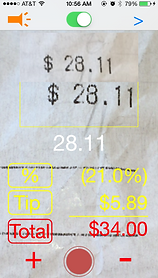

Tip Properly

TipProperly is an iOS app to make tip calculation simple, easy and fair. It provides saving opportunities to an user; and encourage good service and discourage bad service by tipping properly - eliminate reading/rounding error. It uses the optimized Optical Character Recognition (OCR) technology to read number instantaneously and calculates tip automatcally with user options. Pinch to zoom and flashlight support help reading under the poor ambient light condition. It is based on swift and openCV.